In my last post, I talked about using Erlang models to size the front end of a system. By using some fundamental capacity models that are almost a century old, you can estimate the number of request handling threads you need for a given traffic load and request duration.

Inside the Box

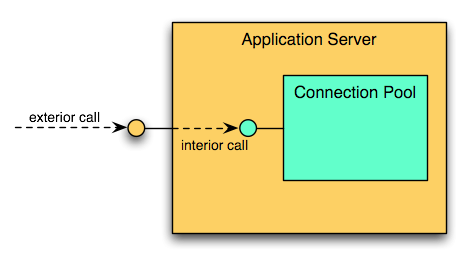

It gets tricky, though, when you start to consider what happens inside the server itself. Processing the request usually involves some kind of database interaction with a connection pool. (There are many ways to avoid database calls, or at least minimize the damage they cause. I'll address some of these in a future post, but you can also check out Two Ways to Boost Your Flagging Web Site for starters.) Database calls act like a kind of "interior" request that can be considered to have its own probability of queuing.

Because this interior call can block, we have to consider what effects it will have on the duration of the exterior call. In particular, the exterior call must take at least the sum of the blocking time plus the processing time for the interior call.

At this point, we need to make a few assumptions about the connection pool. First, the connection pool is finite. Every connection pool should have a ceiling. If nothing else, the database server can only handle a finite number of connections. Second, I'm going to assume that the pool blocks when exhausted. That is, calling threads that can't get a connection right away will happily wait forever rather than abandoning the request. This is a simplifying assumption that I need for the math to work out. It's not a good configuration in practice!

With these assumption in place, I can predict the probability of blocking within the interior call. It's a formula closely related to the Erlang model from my last post, but with a twist. The Erlang models assume an essentially infinite pool of requestors. For this interior call, though, the pool of requestors is quite finite: it's the number of request handling threads for the exterior calls. Once all of those threads are busy, there aren't any left to generate more traffic on the interior call!

The formula to compute the blocking probability with a finite number of sources is the Engset formula. Like the Erlang models, Engset originated in the world of telephony. It's useful for predicting the outbound capacity needed on a private branch exchange (PBX), because the number of possible callers is known. In our case, the request handling threads are the callers and the connection pool is the PBX.

Practical Example

Using our 1,000,000 page views per hour from last time, Table 1 shows the Engset table for various numbers of connections in the pool. This assumes that the application server has a maximum of 40 request handling threads. This also supposes that the database processing time uses 200 milliseconds of the 250 milliseconds we measured for the exterior call.

| N | Engset(N,A,S) |

|---|---|

| 0 | 100.00000% |

| 1 | 98.23183% |

| 2 | 96.37740% |

| 3 | 94.43061% |

| 4 | 92.38485% |

| 5 | 90.23293% |

| 6 | 87.96709% |

| 7 | 85.57891% |

| 8 | 83.05934% |

| 9 | 80.39867% |

| 10 | 77.58656% |

| 11 | 74.61210% |

| 12 | 71.46397% |

| 13 | 68.13065% |

| 14 | 64.60087% |

| 15 | 60.86421% |

| 16 | 56.91211% |

| 17 | 52.73932% |

| 18 | 48.34604% |

| 19 | 43.74105% |

| 20 | 38.94585% |

| 21 | 34.00023% |

| 22 | 28.96875% |

| 23 | 23.94730% |

| 24 | 19.06718% |

| 25 | 14.49235% |

| 26 | 10.40427% |

| 27 | 6.97050% |

| 28 | 4.30152% |

| 29 | 2.41250% |

| 30 | 1.21368% |

| 31 | 0.54082% |

| 32 | 0.21081% |

| 33 | 0.07093% |

| 34 | 0.02028% |

| 35 | 0.00483% |

| 36 | 0.00093% |

| 37 | 0.00014% |

| 38 | 0.00002% |

| 39 | 0.00000% |

| 40 | 0.00000% |

Notice that when we get to 18 connections in the pool, the probability of blocking drops below 50%. Also, notice how sharply the probability of blocking drops off around 23 to 31 connections in the pool. This is a decidedly nonlinear effect!

From this table, it's clear that even though there are 40 request handling threads that could call into this pool, there's not much point in having more than 30 connections in the pool. At 30 connections, the probability of blocking is already less than 1%, meaning that the queuing time is only going to add a few milliseconds to the average request.

Why do we care? Why not just crank up the connection pool size to 40? After all, if we did, then no request could ever block waiting for a connection. That would minimize latency, wouldn't it?

Yes, it would, but at a cost. Increasing the number of connections to the database by a third means more memory and CPU time on the database just managing those connections, even if they're idle. If you've got two app servers, then the database probably won't notice an extra 10 connections. Suppose you scale out at the app tier, though, and you now have 50 or 60 app servers. You'd better believe that the DB will notice an extra 500 to 600 connections. They'll affect memory needs, CPU utilization, and your ability to fail over correctly when a database node goes down.

Feedback and Coupling

There's a strong coupling between the total request duration in the interior call and the request duration for the exterior call. If we assume that every request must go through the database call, then the exterior response time must be strictly greater than the interior blocking time plus the interior processing time.

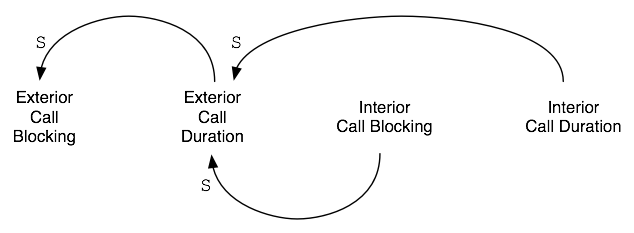

In practice, it actually gets a little worse than that, as this causal loop diagram illustrates.

It reads like this: "As the interior call blocking time increases, the exterior call duration increase. As the interior call blocking increases, the exterior call duration time increases." This type of representation helps clarify relations between the different layers. It's very often the case that you'll find feedback loops this way. Any time you do find a feedback loop, it means that slowdowns will produce increasing slowdowns. Blocking begets blocking, quickly resulting in a site hang.

Conclusions

Queues are like timing dots. Once you start seeing them, you'll never be able to stop. You might even start to think that your entire server farm looks like one vast, interconnected set of queues.

That's because it is.

People use database connection pools because creating new connections is very slow. Tuning your database connection pool size, however, is all about optimizing the cost of queueing against the cost of extra connections. Each connection consumes resources on the database server and in the application server. Striking the right balance starts by identifying the required exterior response time, then sizing the connection pool---or changing the architecture---so the interior blocking time doesn't break the SLA.

For much, much more on the topic of capacity modeling and analysis, I definitely recommend Neil Gunther's website, Performance Agora. His books are also a great---and very practical---way to start applying performance and capacity management.