Agile developers measure their velocity. Most teams define velocity as the number of story points delivered per iteration. Since the size of a "story point" and the length of an iteration vary from team to team, there's not much use in comparing velocity from one team to the next. Instead, the team tracks its own velocity from iteration to iteration.

Tracking velocity has two purposes. The first is estimation. If you know how many story points are left for this release, and you know how many points you complete per iteration, then you know how long it will be until you can release. (This is the "burndown chart".) After two or three iterations, this will be a much better projection of release date than I've ever seen any non-agile process deliver.
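The arithmetic behind that projection is simple enough to sketch. The function name and the sample numbers below are hypothetical, chosen only to illustrate the calculation; a real team would plug in its own backlog size and its own recent velocities.

```python
import math


def iterations_remaining(points_left: float, recent_velocities: list[float]) -> int:
    """Project how many more iterations a release needs.

    Uses the plain average of recent velocities; this is one simple
    choice, not the only way to smooth velocity.
    """
    if not recent_velocities:
        raise ValueError("need at least one completed iteration")
    average_velocity = sum(recent_velocities) / len(recent_velocities)
    if average_velocity <= 0:
        raise ValueError("average velocity must be positive")
    # A partially used iteration still has to be run, so round up.
    return math.ceil(points_left / average_velocity)


# Hypothetical team: 120 points left, last three iterations delivered 18, 22, 20.
print(iterations_remaining(120, [18, 22, 20]))  # 6
```

Multiply the result by the iteration length to get a calendar estimate, and recompute it after every iteration as new velocity data arrives.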

The second purpose of velocity tracking is to figure out ways to go faster.

In the iteration retrospective, a team will do two things. First, they'll recalibrate their estimating technique, to see if they can actually estimate the story cards or backlog items. Second, they'll look at ways to accomplish more during an iteration. Maybe that's refactoring part of the code, or automating some manual process. It might be as simple as adding templates to the IDE for commonly recurring code patterns. (That should always raise a warning flag, since recurring code patterns are a code smell. Some languages just won't let you completely eliminate them, though. And by "some languages" here, I mainly mean Java.)

Going faster should always be better, right? That means the development team is delivering more value for the same fixed cost, so it should always be a benefit, shouldn't it?

I have an example of a case where going faster didn't matter. To see why, we need to look past the boundaries of the development team. Developers often treat software requirements as if they come from a sort of ATM; there's an unlimited reserve of requirements and we just need to decide how many of them to accept into development.

Taking a cue from "Lean Software Development", though, we can look at the end-to-end value stream. The value stream is drawn from the customer's perspective. Step by step, the value stream map shows us how raw materials (requirements) are turned into finished goods. "Finished goods" does not mean code. Code is inventory, not finished goods. A finished good is something a customer would buy. Customers don't buy code. On the web, customers are users, interacting with a fully deployed site running in production. For shrink-wrapped software, customers buy a CD, DVD, or installer from a store. Until the inventory is fully transformed into one of these finished goods, the value stream isn't done.

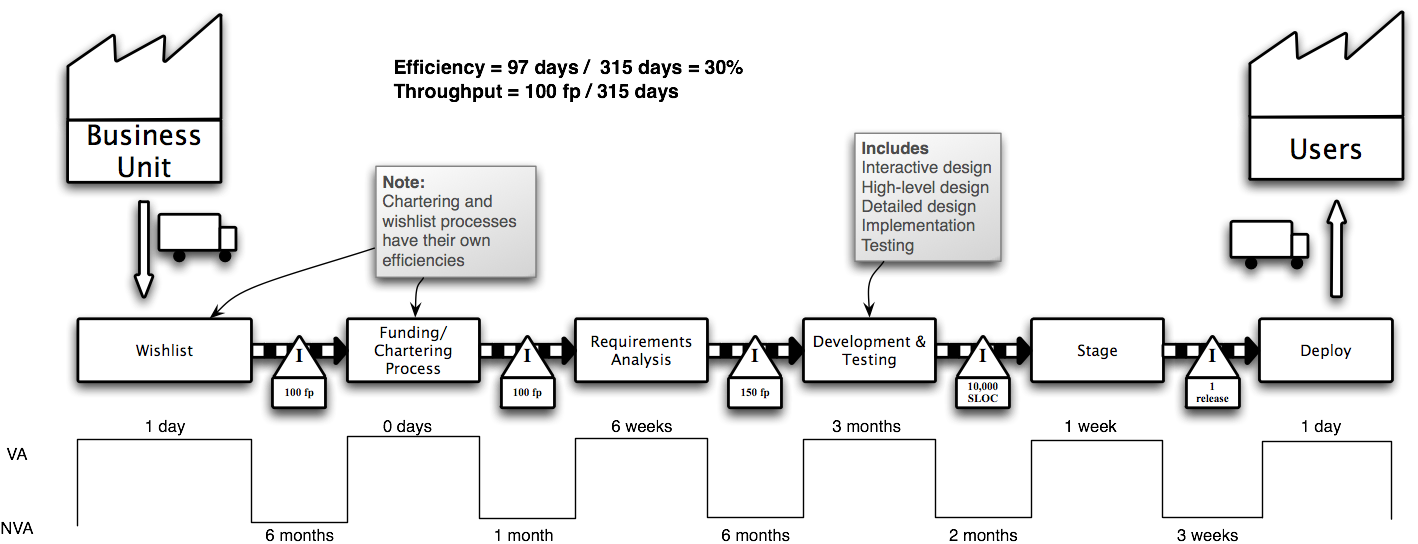

Figure 1 shows a value stream map for a typical waterfall development process. This process has an annual funding cycle, so "inventory" from "suppliers" (i.e., requirements from the business unit) waits, on average, six months to get funded. Once funded and analyzed, the requirements enter the development process. For clarity here, I've shown the development process as a single box, with 100% efficiency. That is, all the time spent in development is spent adding value, as the customer perceives it, to the product. Obviously, that's not true, but we'll treat it as a momentarily convenient fiction. Here, I'm showing a value stream map for a web site, so the final steps are staging and deploying the release.

Figure 1 - Value Stream Map of a Waterfall Process

This is not a very efficient process. It takes 315 business days to go from concept to cash. At most 30% of that time is spent adding value. In reality, if we unpack the analysis and development processes, we'll see that efficiency drop to around 5%.
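For readers who want to run the same calculation on their own process, here is a minimal sketch. The `Step` type and the step durations are hypothetical placeholders, not the values from Figure 1; efficiency is simply value-adding time divided by total elapsed time.

```python
from dataclasses import dataclass


@dataclass
class Step:
    name: str
    wait_days: float  # time the work sits in a queue before this step
    work_days: float  # time spent actually adding value in this step


def lead_time(steps: list[Step]) -> float:
    """Total elapsed days from concept to cash."""
    return sum(s.wait_days + s.work_days for s in steps)


def efficiency(steps: list[Step]) -> float:
    """Fraction of the lead time spent adding value."""
    total = lead_time(steps)
    return sum(s.work_days for s in steps) / total if total else 0.0


# Hypothetical durations, for illustration only.
stream = [
    Step("Funding", wait_days=100, work_days=0),
    Step("Analysis", wait_days=10, work_days=20),
    Step("Development and Testing", wait_days=20, work_days=45),
    Step("Staging and Deployment", wait_days=4, work_days=1),
]

print(lead_time(stream))            # 200
print(f"{efficiency(stream):.0%}")  # 33%
```

Splitting a coarse step such as development into its own waits and work is what drives the measured efficiency down, since the waiting hidden inside the step becomes visible.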

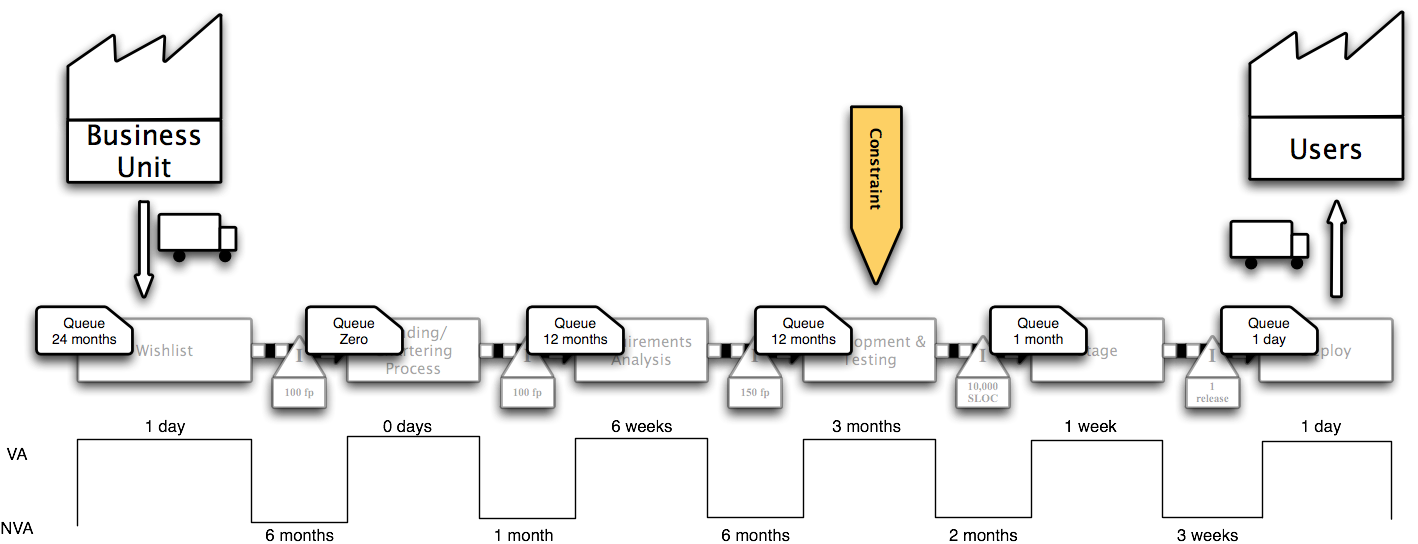

From the "Theory of Constraints", we know that the throughput of any process is limited by exactly one constraint. An easy way to find the constraint is by looking at queue sizes. In an unoptimized process, you almost always find the largest queue right before the constraint. In the factory environment that ToC came from, it's easy to see the stacks of WIP (work in progress) inventory. In a development process, WIP shows up in SCR systems, requirements spreadsheets, prioritization documents, and so on.
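The queue-size heuristic can be written down in a few lines. This is only a sketch: the step names and queue counts are hypothetical, and in practice the counts would come from wherever the WIP is recorded (change-request systems, spreadsheets, and so on).

```python
def likely_constraint(queue_sizes: dict[str, int]) -> str:
    """Return the step with the largest queue waiting in front of it.

    This is a heuristic for an unoptimized process, not a proof that
    the step is the constraint.
    """
    if not queue_sizes:
        raise ValueError("no steps given")
    return max(queue_sizes, key=queue_sizes.get)


# Hypothetical counts of items waiting in front of each step.
queues = {
    "Analysis": 12,
    "Development and Testing": 40,
    "Staging": 0,
    "Deployment": 0,
}

print(likely_constraint(queues))  # Development and Testing
```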

Indeed, if we overlay the queues on that waterfall process, as in Figure 2, it's clear that Development and Testing is the constraint. After Development and Testing completes, Staging and Deployment take almost no time and have no queued inventory.

Figure 2 - Waterfall Value Stream, With Queues

In this environment, it's easy to see why development teams get flogged constantly to go faster, produce more, catch up. They're the constraint.

Lean Software Development has ten simple rules to optimize the entire value stream.

ToC says to elevate the constraint and subordinate the entire process to the throughput of the constraint. Elevating the constraint---by either going faster with existing capacity, or expanding capacity---adds to throughput, while running the whole process at the throughput of the constraint helps reduce waste and WIP.

In a certain sense, Agile methods can be derived from Lean and ToC.

All of that, though, presupposes a couple of things:

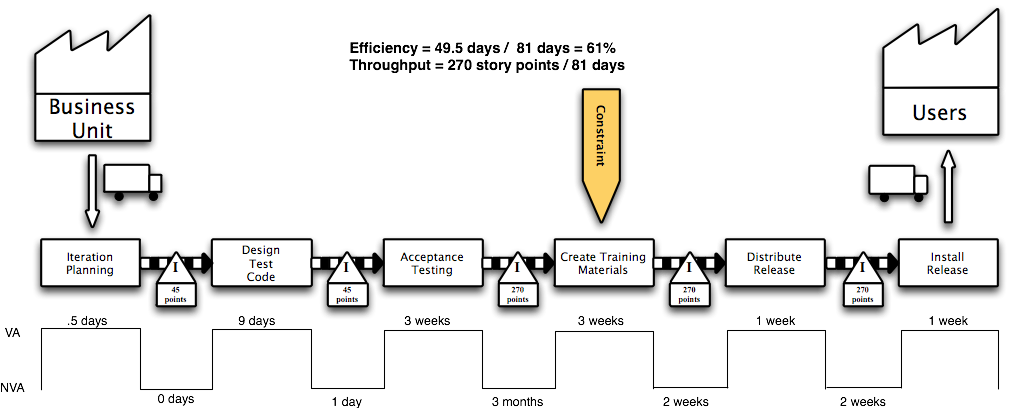

Figure 3 shows the value stream map for a project I worked on in 2005. This project was to replace an existing system, so at first, we had a large backlog of stories to work on. As we approached feature parity, though, we began to run out of stories. The users had been waiting for this system for so long that they hadn't given much thought, or at least recent thought, to what they might want after the initial release. Shortly after the second release (a minor bug fix), it became clear that we were actually consuming stories faster than they could be produced.

Figure 3 - Value Stream Map of an Agile Project

On the output side, we ran into the reverse problem. This desktop software would be distributed to hundreds of locations, with over a thousand users who needed to be expert on the software in short order. The internal training group, responsible for creating manuals and computer-based training videos, could not keep revising their training modules as quickly as we were able to change the application. We could create new user interface controls, metaphors, and even whole screens much faster than they could create training materials.

Once past the training group, a release had to be mastered and replicated onto installation discs. These discs were distributed to the store locations, where associates would call the operations group for a "talkthrough" of the installation process. Operations had a finite capacity and could handle only so many installations each day. That set a natural throttle on the rate of releases. At one stage, after I rolled off the project, I know that a release which had passed acceptance testing in October was still in the training group by the following March.

In short, the development team wasn't the constraint. There was no point in running faster. We would exhaust the inventory of requirements and build up a huge queue of WIP in front of training and deployment. The proper response would be to slow down, to avoid the buildup of unfinished inventory. Creating slack in the workday would be one way to slow down, and drawing down the team size would be another. Increasing the capacity of the training team would be an equally valid response. There are other places to optimize the value stream, too. But the one thing that absolutely wouldn't help would be increasing the development team's velocity.
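A toy simulation makes the point concrete. Everything here is an assumption made for illustration: the three-stage model (requirements arrive, development builds, training releases), the `simulate` function, and all of the rates are hypothetical and are not taken from the project described above.

```python
def simulate(periods: int, backlog: int, arrivals: int,
             dev_capacity: int, training_capacity: int) -> dict[str, int]:
    """Run a simple requirements -> development -> training pipeline.

    All quantities are in stories; capacities are stories per period.
    """
    waiting_for_training = 0
    released = 0
    for _ in range(periods):
        backlog += arrivals                    # new stories from the users
        built = min(backlog, dev_capacity)     # development does what it can
        backlog -= built
        waiting_for_training += built          # finished code becomes WIP
        trained = min(waiting_for_training, training_capacity)
        waiting_for_training -= trained
        released += trained                    # only this reaches customers
    return {"released": released, "wip": waiting_for_training, "backlog": backlog}


# Hypothetical rates: training (6 per period) is the constraint.
base = dict(periods=5, backlog=40, arrivals=5)
print(simulate(**base, dev_capacity=10, training_capacity=6))
# {'released': 30, 'wip': 20, 'backlog': 15}
print(simulate(**base, dev_capacity=20, training_capacity=6))
# {'released': 30, 'wip': 35, 'backlog': 0}
print(simulate(**base, dev_capacity=10, training_capacity=10))
# {'released': 50, 'wip': 0, 'backlog': 15}
```

In this model, doubling development capacity releases nothing extra: it drains the backlog and piles up WIP in front of training. Raising training capacity is the only change that increases what customers receive.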

For nearly the entire history of software development, there has been talk of the "software crisis", the ever-widening gap between government and industry's need for software and the rate at which software can be produced. For the first time in that history, agile methods allow us to move the constraint off of the development team.